In today’s post, we will explore how Artificial Intelligence (AI) reinforces stereotypes and biases through AI image generators.

In her book, Algorithms of Oppression: How Search Engines Reinforce Racism, Safiya Noble argues that the algorithms of search engines such as Google play a crucial role in how women and minority groups are represented, both negatively and positively. She also challenges the idea that search engines are neutral. According to Noble, search engines reinforce negative stereotypes, which leads to the normalisation of sexism and racism in the digital sphere. She identifies the algorithms themselves as one of the reasons for this.

(Noble, 2018: 29)

Algorithms are not neutral, as they reflect the opinions and beliefs of their programmers and of the people who control them. This is also the case for Artificial Intelligence, since it relies on algorithms. However, bias and stereotypes in AI exist not only because of algorithms but also because of datasets. This is particularly true of AI image generation, where the AI is trained on datasets made up of millions of real images, which cannot be neutral. Thus, if the datasets are biased, the AI will replicate these biases, and it will reinforce them further as newly generated images are added to datasets after their creation.

AI image generators and stereotypes

To demonstrate that AI reinforces stereotypes, I asked ChatGPT, a generative AI chatbot, to generate an image of a French woman. The chatbot responded to my request with this image.

At first glance, it looks like a normal AI-generated image. However, it contains many stereotypes. First, the woman is wearing a beret and a striped sweater, a stereotypical French outfit. Over the sweater, she seems to be wearing a trench coat, a symbol of elegance; French women are often seen as dressed up and elegant. Second, the woman portrayed is white. Finally, the background looks like a typical Parisian street. This AI representation of a French woman is reductive: many different fashion styles exist in France, the country is home to people of many ethnicities, and France is not only Paris. This is only one example among many of the stereotypical view of the world that AI holds.

The Rest of World analysis

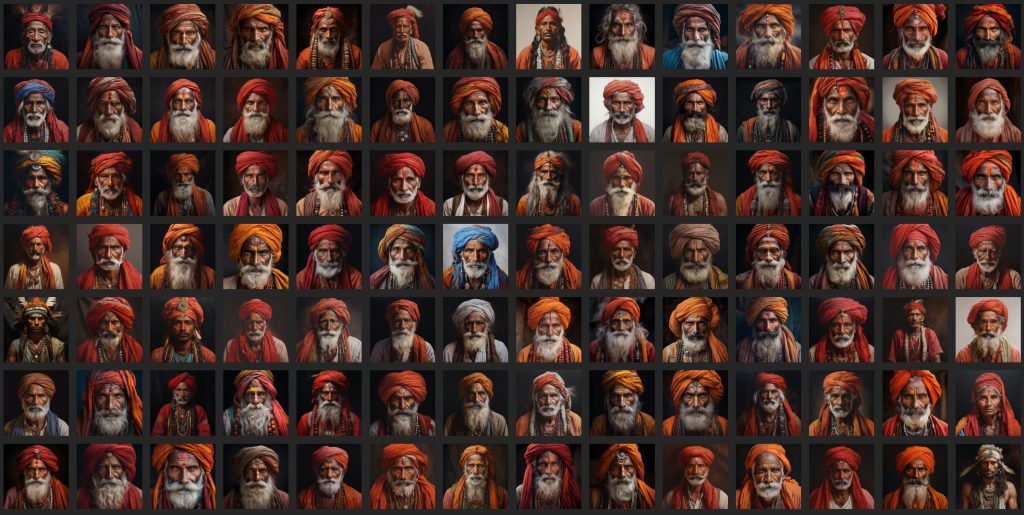

To understand how AI image generators see the world, Rest of World, a non-profit digital media outlet, analysed 3,000 AI-generated images. Five prompts were combined with six countries, giving 30 combinations, and 100 images were generated for each combination, for a total of 3,000 images. After analysing the images, Rest of World concluded that they present a stereotypical view of the world. For instance, an ‘Indian person’ (the instruction given to the AI image generator) is usually depicted as an old man with a beard wearing a turban.

Thus, these representations of the world are biased, as the AI does not account for the ethnic and cultural diversity within a country.

Although they show the world in a reductive way and perpetuate stereotypes, these particular AI-generated images are not harmful in themselves. However, when it comes to race and gender, AI-generated images can be harmful. For instance, an analysis carried out by Bloomberg found that AI-generated images associate race and gender with economic and social status.
